Tech Executive, Pioneering Researcher, Leading Innovator

About me

I am a highly motivated, technically experienced executive with nearly 20 years of experience in the field of flow simulation and high performance computing. My work spans a wide range of fluid mechanics applications across aerodynamics and hydrodynamics, and various engineering disciplines, including civil, coastal, marine, and automotive engineering.

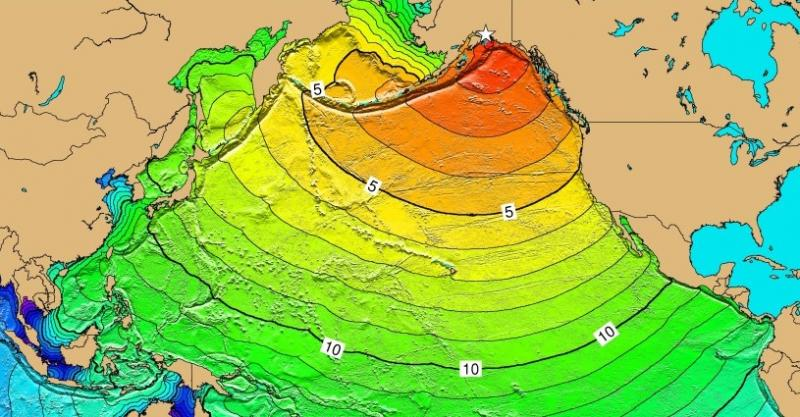

I earned my degrees in Civil Engineering and Computational Sciences in Engineering (CSE) from Braunschweig University of Technology in Germany. In 2010, I completed my Ph.D., focusing on kinetic approaches for the simulation of non-linear free surface flow problems in civil and environmental engineering. After a postdoctoral position in Ocean Engineering at the University of Rhode Island, I returned to Germany, where I led the development of the GPU-accelerated free surface solver ELBE at Hamburg University of Technology.

In 2017, I joined Altair Engineering, where I now serve as Vice President, CFD Solutions.

My research interests include wall-modeled LES for aerodynamic and hydrodynamic applications, quantum computing for CFD, machine learning for CFD, and real-time simulation for STEM teaching.

Performance Metrics

50+ Publications

Publications in scientific journals, conference proceedings, and books (List of publications)

30+ Students

Scientific advisor to students at the M.Sc. and Ph.D. levels.

50+ Talks

Experienced speaker at international conferences and events.

1.3M+ Acquired funding

Proven track record in project acquisition and delivery.

GTC Europe 2016

The elbe team was part of the first European GPU Technology Conference that took place on September …

SMM 2016

Busy 2016! After having successfully organized ICMMES-2016, we’re now contributing to this yea…

ICMMES 2016

We’ve been rather busy lately, as we’re currently organizing the 13th International Conf…

elbe at the Hamburg Innovation Summit 2016

The elbe trade fair tour continues! Visit us on May 30, 2016, at the Hamburg Innovation Summit, the P…

Teaching

Modellierung und Simulation maritimer Systeme (teaching assignment, from summer semester 2018)

Modeling and simulation of maritime systems

In the scope of this lecture, students learn to model and solve selected maritime problems with the help of numerical software and scripts. First, basic concepts of computational modeling are explained, from the physical modeling and discretization to the implementation and actual numerical solution of the problem. Then, available tools for the implementation and solution process are discussed, including high-level compiled programming languages on the one hand, and interpreted programming languages and computer algebra systems on the other hand. In the second half of the class, selected maritime problems will be discussed and subsequently solved numerically by the students.

Lattice-Boltzmann-Methoden für die Simulation von Strömungen mit freien Oberflächen (teaching assignment, from winter semester 2018/19)

Lattice-Boltzmann methods for the simulation of free surface flows

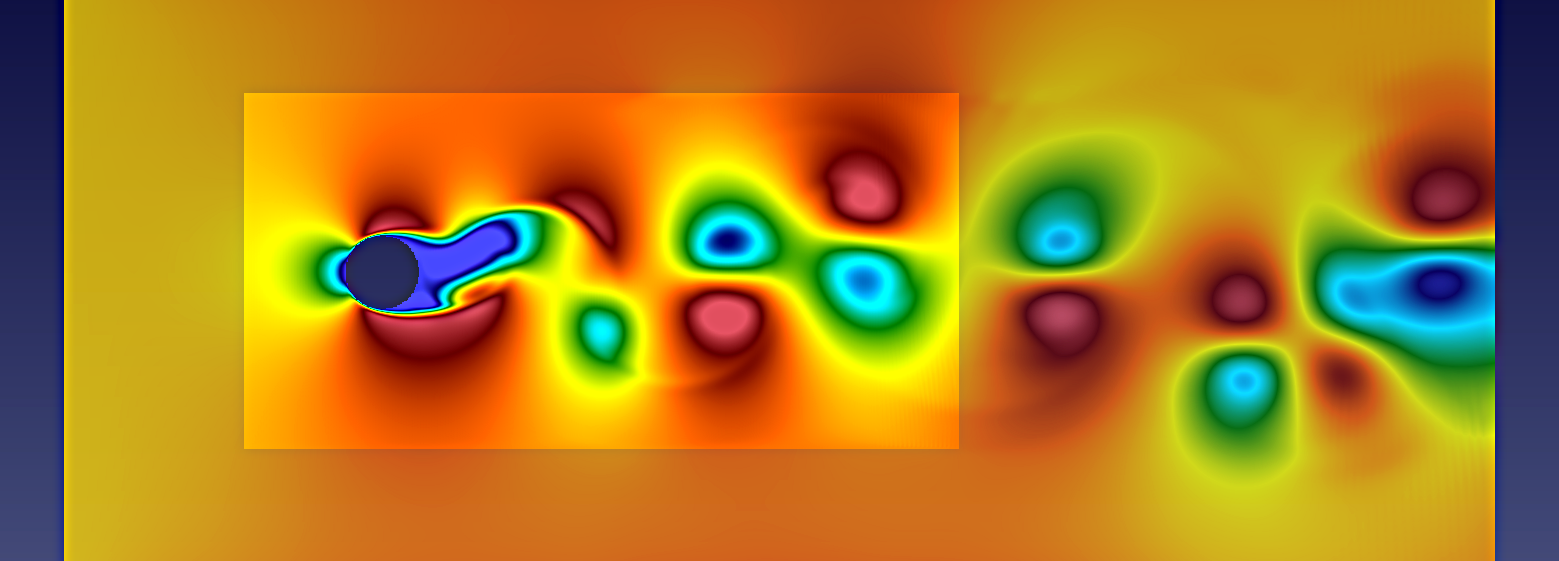

This lecture addresses Lattice Boltzmann methods (LBM) for the simulation of free surface flows. After an introduction to the basic concepts of kinetic methods (LGCAs, LBM, ...), recent LBM extensions for the simulation of free surface flows are discussed. Alongside the lecture, students solve selected maritime free surface flow problems numerically.

Previous activities include teaching partial differential equations, numerical methods, and thermodynamics at Braunschweig University of Technology in the group of Prof. Krafczyk (2007-2010), and teaching fluid mechanics, numerical methods, and high performance computing at Hamburg University of Technology in the group of Prof. Rung (2012-2017).

Send me a message!

I will be happy to hear from you!